We’ve been told that AI is replacing developers, driving layoffs, and ushering in a radically efficient new future. But the truth is far more boring, far more revealing, and far more predictable.

One of the real triggers for mass tech layoffs wasn’t AI. It was a quiet tax code change. Section 174 of the US Internal Revenue Code, modified in 2017 under the Trump administration, now requires companies to amortize R&D costs over five years rather than deducting them up front. The result is that big tech companies had to suddenly “get lean”. Enter layoffs, spun as strategic AI pivots1.

In other words, AI didn’t cause tech layoffs. It offered a convenient and compelling narrative. It’s far more grindset-approved to tell investors and the press that you’re embracing “radical innovation” or launching “the year of efficiency” than to admit, “Remember when we backed the Trump tax cuts? Turns out his changes to Section 174 just nuked our entire operating model. Now we have to lay off 20 percent of our developers ASAP.”

Even non-American tech companies got hammered by the ripple effects. When margins collapse, so do B2B sales, international hiring, and partner budgets. The whole ecosystem tightens like a vice. VCs vanished into a dark place with no profits and no hype, until the ritual chants of “AI” began.

Suddenly, under the spell of this dark art, every zombiecorn twitched back to life with nothing more than an “AI” incantation slapped onto their tagline. VCs emerged from hibernation and began petting them again. Good AI zombiecorn, so pretty. We shall call this the ritual of Bernaysian necromancy.

This isn’t a one-off. The mismatch between reality and narrative is one we’ve seen across history, from the electrical current wars of the 1800s to the VHS vs Betamax saga.

And that’s why I wrote this.

Let’s be real. AI is exciting and already brings some legit value, but much of today’s AI enthusiasm is speculative. No major AI-native company has gone public with sustained success. High-quality FOSS contributions from LLMs are rare. Instead, as you’d expect, AI is augmenting the actual skill of skilled developers. “AI-driven” (end-to-end) pull requests are absolute slop and require a ton of human intervention to merge. If the hype were real, we’d see a tidal wave of high-quality, automated contributions across the open source ecosystem. We don’t.

Let’s look more closely at how hype is manufactured, and what history can teach us about this moment in time.

Why Does Anyone Eat Bacon for Breakfast?

In the 1920s, the Beech-Nut Company enlisted the help of Edward Bernays, a former wartime propagandist, university professor, and founder of the first US marketing firm, to help them offload surplus bacon. That’s right. Americans love bacon for breakfast because in the 1920s, a company called Beech-Nut (you can’t make this stuff up) had way too much bacon, and not a large enough market to sell it all to.

Bernays understood a simple reality. The public trusted their doctors for dietary advice more than former wartime propagandists.

In a brilliant and diabolical move, Bernays asked his own firm’s on‑staff doctor a leading question: “Would a bigger breakfast be healthier?” The doctor agreed in spirit, offering a casual opinion.

“More energy at the start of the day is a good thing.”

Bernays then ask the firm’s doctor write to 5,000 of his medical colleagues with the same question. Over 4,500 doctors responded in agreement, and the headline practically wrote itself.

“4,500 physicians urge Americans to eat heavy breakfasts to improve their health.”

It was a compelling story, and it soon appeared in newspapers across America as sound medical advice. Conveniently placed alongside these articles were ads for Beech‑Nut Bacon.

What started as a consensus of “casual opinions”, driven by heavy bias and various pressures, was repackaged as peer-reviewed science to sell more bacon. It worked so well that over a hundred years later, bacon remains a staple on American breakfast tables.2

Once Bernays had manufactured the need, the bacon sold itself.

This story about bacon sales isn’t some one-off quirky historical footnote. It shows how Edward Bernays applied the very techniques of propaganda and public relations he had been developing to sell more or less anything, as long as the need was manufactured first.

His work wasn’t really about selling bacon. Bernays couldn’t have cared less about pork products. His real contribution was adapting the propaganda techniques he honed during wartime into tools for business in early industrial America. Bernays didn’t sell to a market, he created the market first, then let people convince themselves they needed what he was offering.

The Origin Story of Public Relations

It’s no accident that Edward Bernays was so successful in offloading surplus bacon for the Beech-Nut Company and changing breakfast in the process. He applied techniques for influencing public opinion that he had already formalized and taught at the university level.

Bernays himself wrote the first academic textbook on the field of public relations, Crystallizing Public Opinion (1923), and taught the first class on public relations at New York University (NYU) that same year.

Edward Bernays was originally a US government propagandist for the Committee on Public Information (CPI) during World War I, just before publishing his textbook and starting to teach public relations. He worked at the CPI alongside another influential propagandist, Ivy Lee, who was the first public relations executive in corporate history for the Pennsylvania Railroad Company. It was Lee who wrote the first job description for a VP-level public relations executive.

Through this formal study and teaching, combined with heavy influence from the emerging fields of psychology and psychoanalysis, the concepts of rational manipulation and engineered consent emerged. These are tactics that have been used in business for over 100 years and have been designed and studied from the very beginning of marketing and public relations as professions. We can see their effects in everything from the public acceptance of smoking to the widespread belief that a nutritious breakfast includes bacon and eggs.

At its core, rational manipulation refers to the calculated shaping of public perception by using logic, authority, or expertise to create a veneer of rationality. The manipulator presents information in a way that seems reasonable and factual, but with the goal of steering audiences toward a predetermined conclusion.

Engineered consent goes a step further. It’s about constructing an environment where the audience not only accepts a given message, but believes they arrived at the decision themselves, freely and rationally. This is the bread and butter of modern marketing, politics, and even corporate tech communications.

The use of these techniques is at the heart of the “modern propaganda” we will discuss, which is essentially what’s been taught and used in professional settings since the 1920s to gain mass influence.

A Working Definition of Propaganda

We previously explored the evolution of public relations and psychoanalysis to show how the history of marketing has shaped nearly every facet of modern life from the cars we drive, to the fashion we favor, to the developer tools we use.

As we continue on this exploration, we need a clear working definition of propaganda to guide us in understanding how propaganda shapes our choices in the modern technical world. When does evangelism stray into propaganda? Where is an ethical line drawn?

- Propaganda is an unethical and manipulative tactic used to sway public opinion to favor the interests of certain individuals or groups.

- Education, by contrast, is a noble endeavor aimed at sharing knowledge, facts, and skills transparently and ethically from one individual or group to another.

While propaganda can sometimes cross legal boundaries and become a criminal act, not all forms of propaganda are illegal, and most propaganda is never criminal. But propaganda always masquerades as education, blurring the lines between indoctrination and instruction.

A helpful way to understand this is to contrast evangelism and advocacy.

Evangelism vs Advocacy

Evangelism is when someone spreads a message on behalf of an institution or authority, often to expand influence or build loyalty. In tech, this is embodied by the Developer Evangelist, who promotes a company’s technologies to generate adoption and enthusiasm.

In contrast, advocacy is fundamentally different. It represents the interests of a community or group, not the institution. Think of a Developer Advocate as someone who stands with developers, fighting for their needs and concerns, more like a union leader than a brand ambassador. True advocacy, one that prioritizes developers’ interests over corporate goals, is more often found in independent open source communities, and are rarely paid roles (unfortunately).

And that’s the catch. In the real world, Developer Advocates rarely, if ever, operate independently of corporate interests. Most “Developer Advocate” roles are situated within Marketing or Developer Relations departments, reporting to the same chain of command tasked with filling the top of the sales funnel.

Increasingly, these roles aren’t even funded by the marketing budget. Instead, executives or founders assume the mantle of “Chief Evangelists”, posturing as “Developer Advocates”, and flip flopping depending on which way the wind blows. One minute these so-called advocates are championing developers, the next laying them off in record numbers.

It’s important to note that evangelism doesn’t imply a lack of ethics. It simply means that professionals in these roles are accountable for KPIs, and increasing the “top of the funnel” has little to do with truly advocating for developers’ interests. This is why most advocacy work is actually evangelism.

Evangelism itself is not unethical. But evangelism becomes propaganda when it trades truth for persuasion.

How and When Ethical Lines Are Crossed

When a message is delivered not to inform, but to manipulate, and does so under the banner of “education”, it’s propaganda.

The essence of propaganda lies in its pretense. Propaganda dresses up persuasion as objective fact, hides bias behind polished language, and turns what should be a genuine exchange of ideas into a calculated performance aimed at shifting public behaviour.

In tech, lines are crossed when thought leaders and developer evangelists present content that appears educational but subtly pushes a predetermined narrative benefiting a company or product, rather than the developer community or society at large. It can be as simple as a company sponsoring posts to promote a technology, without revealing the sponsorship. This practice is becoming increasingly common in LinkedIn and YouTube tech circles, where evangelism shifts from sharing knowledge to selling influence.

The danger isn’t evangelism itself, it’s the erosion of transparency that transforms informed consent into engineered consent.

Replacing Evangelism with Education

There’s a growing awareness of the reputational risks involved in pushing propaganda, and some companies have established educational teams focused on training users in their technology, without being accountable for lead generation. This is a step in the right direction, and it’s an approach I hope more tech companies adopt.

Fast-forward to today, and the propaganda battleground is artificial intelligence. And this time, it’s a bit different, even though I loathe using that phrase, but the scale and centralization of influence are unprecedented.

AI evangelism isn’t coming primarily from developer advocates or marketing teams, it’s coming straight from boardrooms, CEOs, and institutional leaders, amplifying its reach and impact into every corner of society. This top-down evangelism is rare. It’s designed to shape public perception swiftly, leaving little space for critical engagement. Recognizing its tactics is key if we hope to resist being swept up in the hype.

The Propaganda of Modern AI

The term “AI” itself is a chameleon, used differently depending on the speaker’s interests. That’s no accident. The phrase “artificial intelligence” was coined in 1955 by computer scientist John McCarthy in his funding proposal for the Dartmouth Summer Research Project on Artificial Intelligence. McCarthy chose the label because it sounded bold, helpful for attracting grant money without meaning anything specific.34

Today, a Chief Marketing Officer might refer to AI as a broad marketing umbrella for various technologies. A researcher may refer to AI in the pursuit of artificial “general” intelligence. A computer scientist might mean a specific topic like neural nets. Programmers often think of concrete implementations like RAG. The general public pictures chatbots and digital assistants. Investors, meanwhile, see nothing but market potential, spawning curiosities like AI-powered toothbrushes.

Let’s discuss another of these AI-powered curiosities as a vehicle to explore the modern propaganda around AI. Something bound to get a lot of attention is “AI-powered email”. In a recent pitch from YC’s startup showcase, YC leadership demoed an LLM-powered system5 that could replace static filters with a chat-like interface:

“Sort emails from my boss into a VIP folder, route my wife’s messages to family, and dump the rest into ‘later’, all with natural language prompts!”

This sounds cool, but was filtering and categorizing emails really a problem? Repacing deterministic, efficient if/else statements with non-deterministic predictions in the real-time path of a system is not only expensive and unnecessary, but a solution looking desperately for a problem.

Evangelists speak of replacing simple, cheap logic like if/else statements with sprawling, energy-hungry, probabilistic models as not only better, but inevitable.

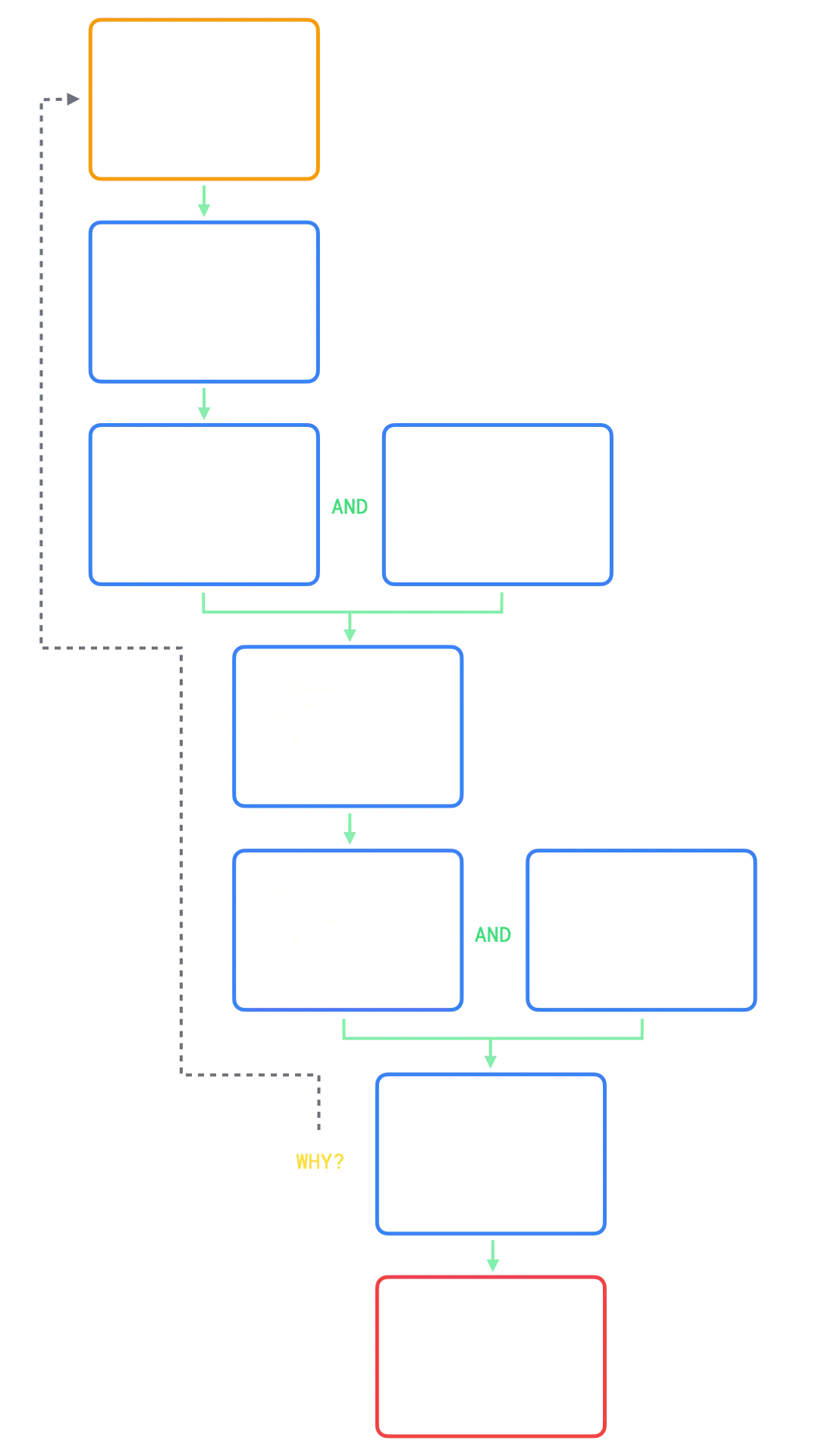

In Bernays’ playbook, this is classic:

- Define the problem

- “Email filters are too rigid.”

- “Programmers are too expensive.”

- Offer the magic solution

- “AI can do all of it much better.”

- Engineer consent

- Flood the market with stories, demos, and proofs-of-concept.

- Make the new normal seem inescapable.

Centuries of propaganda prove that this strategy works. Once the public buys in, competitors will scramble to imitate, and entire industries will reorient around a mirage of intelligent automation.

The actual costs in compute, labour, or long-term sustainability will get papered over by the sheer force of the narrative. And once the damage is done, those who caused the damage are likely to be onto their next venture, selling solutions to all of the problems they created.

Engineering Consent and AI Snake Oil

Edward Bernays’ Beech-Nut campaign was about engineering “consent” through the use of trusted voices. Fast forward a century, and technology vendors are following the same playbook.

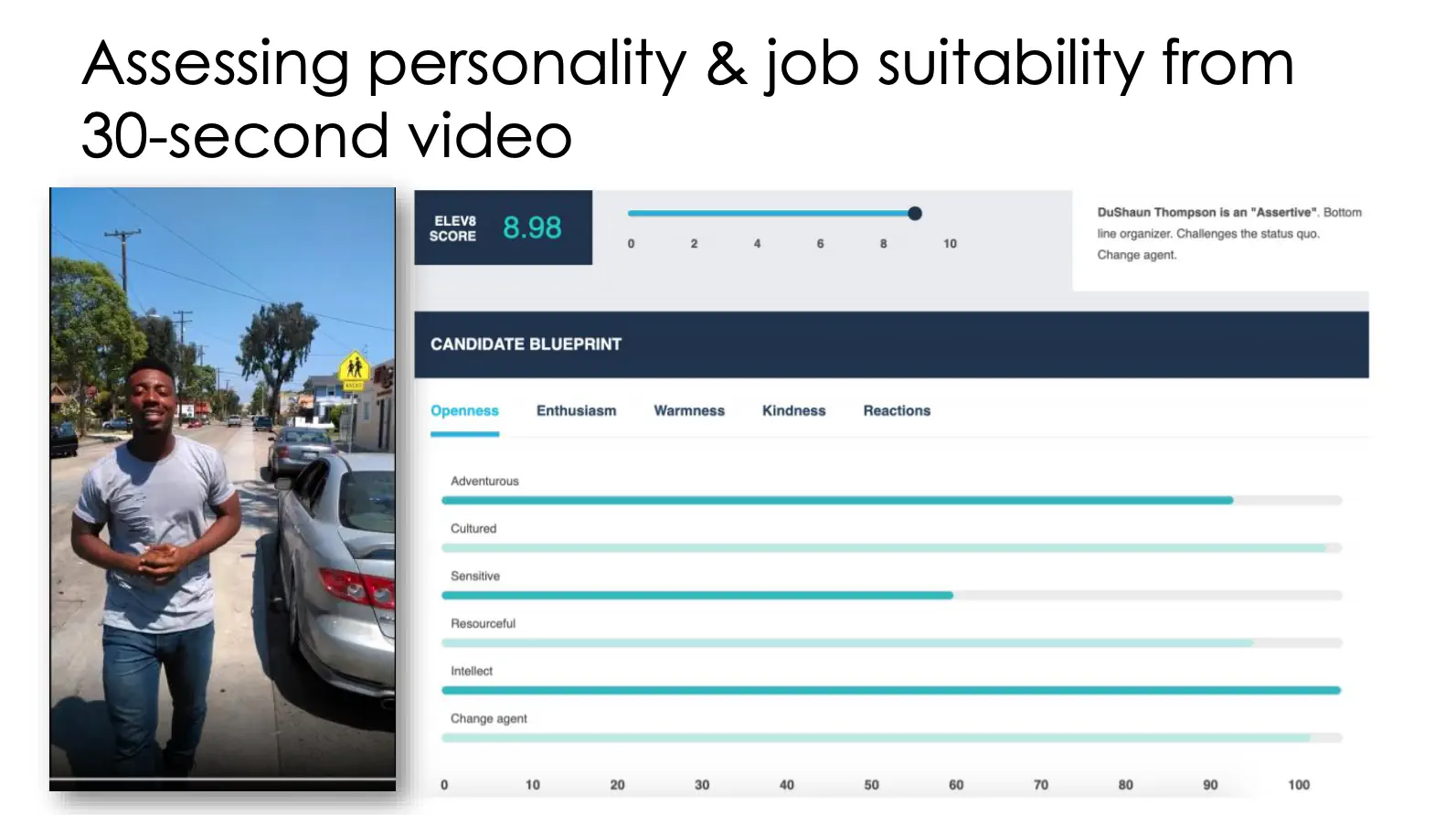

Let’s explore another AI-powered curiosity, AI-powered job recruiting tools. In the world of HR and hiring, companies are slapping the “AI” label on products like video screening tools, claiming that their “sophisticated algorithmic intelligence” can predict future job fit from a candidate’s body language during a single 30-second video clip.

The research paper Mitigating Bias in Algorithmic Hiring: Evaluating Claims and Practices6 cuts through the hype to expose what’s really happening in the AI hiring space, which paints a much different picture than the hype.

The paper reveals the reality that these AI hiring tools are built on big promises but very little substance. Out of 18 vendors reviewed, most refused to share even basic scientific details like what data their models were trained on, how success was measured, or whether the tools worked in the real world. Instead, they relied on vague “AI-powered” buzzwords and proprietary algorithms, shielding their methods from scrutiny and wrapping the whole package in slick marketing language.

When it comes to fairness, the paper reveals that vendors’ claims fall flat. Only 7 out of 18 vendors even mentioned testing for bias (adverse impact). There was no real evidence that these tools reduced bias or improved hiring outcomes. In fact, the study found that many of these models might reflect and reinforce existing biases baked into historical HR data.

Arvind Narayanan’s AI Snake Oil talk unpacks this beautifully.7

“Millions of people applying for jobs have been subjected to these algorithmic assessment systems. They claim to work by analysing body language, speech patterns, etc. Common sense tells you this isn’t possible; AI experts agree. It is essentially an elaborate random-number generator.”

We see the Bernays playbook unfurl to a tee.

- Frame the problem

- “Hiring is subjective and biased.”

- Offer a magic solution

- “AI can automate fairness and objectivity.”

- Engineer consent

- Flood media with case studies and glowing endorsements from “experts”.

- Create an illusion of inevitability.

This is the same engineered consent that Bernays described, where public opinion is shaped by emotional narratives and trusted proxies that cosplay as evidence. Applied to the job market, these tools turn hiring, traditionally governed by human judgement and rules, into an arms race of expensive, compute-intensive guesswork. But it doesn’t stop there. The pattern repeats whenever hype outpaces substance.

To cut through the nonsense, we need to separate the promise of AI from its physics and economics. We’re now poised to trade cheap, deterministic logic for expensive, fragile hallucinations, all because a few glossy marketing campaigns make it feel inevitable.

Today’s AI influencers love to claim that replacing most white-collar work, including developers, is inevitable. This follows the Bernays playbook perfectly:

- Define the problem as unavoidable.

- Sell the solution.

But what’s the real incentive at play? For instance, why push to replace developers with AI?

-

One theory is that as the market becomes less capable of writing code, a few companies stand to make a fortune as the (nearly) sole providers of development capabilities and development tools.

-

Another theory is that we’re simply watching Silicon Valley do what it does, which is to grow at all costs with little concern for the consequences, as long as a handful of people end up obscenely rich.

The contrarian view is more nuanced, and less likely to wind up as a sound bite in mainstream media. Hard skills still matter, and we have no evidence that they don’t.

ThePrimeagen recently put it this way on Lex Fridman’s podcast, “AI is not going to replace all programmers, it’s going to replace the bad ones.” He continued, “If you don’t understand the fundamentals of computer science, if you don’t understand how to write code properly, you’re going to be replaced by someone who does.”

Essentially, these tools are only as good as the person using them. Without a solid foundation of hard skills, people risk being outworked by AI, while companies become trapped in the orbit of proprietary AI systems8. This type of message is more nuanced, which means the relentless propaganda machine will almost certainly drown it out. The consequence of the hype is that fewer students will pursue computer science, and rational manipulators will gain even more power as skills consolidate.

But it doesn’t matter. If developer talent becomes scarce, those who pushed this narrative will be happy to lock you into their proprietary AI-powered “magic code” development tools, I’m sure. It’s simple “attention math”. The doom-saying CEO gets infinite coverage, while the quiet retraction of a false narrative barely registers. By the time the truth surfaces, the damage is done, and those who caused the damage profit.

The Daunting and Real Economics of AI

Let’s visit some of hard costs that are being hand-waved away by the rational manipulators. Karen Hao, author of Empire of AI, warns, “There is no such thing as a free lunch when it comes to AI. These systems take an enormous amount of energy to train and run.” She highlights how “GPT-4 alone used the equivalent of the electricity consumption of 120,000 U.S. homes in a year.” And that’s just one model.

Most U.S. data centers still rely on fossil fuels. While evangelists hype AI-curiosities, the environmental impact of these models is conveniently ignored, hand-waved away in the race to scale bigger and faster before AI does “bad things to us all”9.

In other words, “society, focus on those hypothetical future bad things while we sell things!”, but “please ignore the actual bad things we already have evidence of so we can sell things!”

Let’s run some numbers using the email filtering and classifying example. Filtering email with different methods burns dramatically different amounts of energy. (These estimates are grounded in benchmarks and real-world measurements of inference energy consumption for different architectures. See the references.)

| Scenario | Energy / email |

|---|---|

Deterministic rule (if sender ∈ safeList) |

90 nJ 1 |

| Lean text-classifier (BERT-base, 120M params) | 2 × 10⁻⁶ kWh (≈7.2 J) 10 |

| GPT-4o call (general LLM) | 3 × 10⁻⁴ kWh (≈1,080 J) 1112 |

Deterministic logic is essentially free at scale. Filtering one million messages with a plain if/else statement consumes just 2.5 × 10⁻⁸ kWh (≈ 90 nJ per email), resulting in electricity costs of fractions of a penny. But step up to a lean BERT-style classifier and the same batch jumps to 2 kWh, and calling GPT-4o instead takes you to 300 kWh.

That’s a 12-billion-fold jump in both energy use and energy cost compared to the trivial deterministic approach (from about $0.000,000,0018 to $21 for the million-message batch).

| Scenario | Energy (kWh) for 1M emails | Electricity @ $0.07/kWh |

|---|---|---|

Deterministic rule (if sender ∈ safeList) |

2.5 × 10⁻⁸ | $0.000,000,0018 |

| Lean text-classifier (BERT-base, 120M params) | 2 | $0.14 |

| GPT-4o call (general LLM) | 300 | $21.00 |

Twenty-one bucks may not sound like much, but scaled across global email traffic, the environmental impact becomes absurd compared to the marginal benefit.

Why don’t we see these numbers factored into YC podcasts and TED Talk hype? Because the current system at play hides the real costs. VC funding and government energy incentives are subsidizing the true price of large-scale AI training and inference. Data center oversupply and artificially cheap electricity make LLMs look economical even when they are not.

Right now, the world is getting a free taste of AI before the real bill arrives. GPU cycles in the cloud seem cheap, only because the bill is split across three sources:

- VC burn: credits from OpenAI, Anthropic, and others

- Government energy incentives: US IRA 45X tax credits, UK £300M data center grants

- Overbuilt capacity: post-lockdown data centers chasing utilization

Switch off the subsidies, and that fun little “filter my email with GPT-4o” demo quickly peels your wallet open and gorges itself.

For a firm with 10,000 employees getting 120 emails each per day, that’s about 131 MWh a year, roughly $9,198 in electricity or a possible $876 in API fees (given GPT-4o published price ≈ $15 per 1 M output tokens → ≈ $2 per 1 M short emails). All to solve a problem that didn’t really exist.

And cost is not the only risk.

| Axis | Deterministic rule | LLM prediction |

|---|---|---|

| Energy / call | ≈ 90 nJ | 0.3 Wh |

| Failure mode | Mis-regex (easy to test) | Hallucination, jailbreak |

| Debug cost | Unit-test | Human review, legal exposure |

| Privacy risk | Stays on CPU | Ships raw inbox to third party |

I see nothing that could possibly go wrong.

Once the subsidies dry up and capacity contracts, the real price of large-scale AI usage will come due. Companies will be forced to reckon with the actual cost of running sprawling models for tasks that cheaper, more efficient methods could already handle. The illusion of affordability will collapse, and the rational manipulators will move on to engineer the next narrative.

But these costs aren’t a problem for vested interests, which is why it gets little airtime. The real mission is for rational manipulators to hook the world while the costs are subsidized, then control the supply once everyone is locked in.

That leads us to the next act.

Framing AI as a Battle of Good Versus Evil

In a recent TED talk, Eric Schmidt painted AI as a high-stakes moral showdown13, claiming it’s a battle of good versus evil, with bad things happening to the loser. His message is clear: superintelligence will emerge slowly at first, and then proliferate suddenly. There will then be winners and losers. “Losing” by even a single day, he warns, could mean catastrophic events, like rogue biological attacks or data centers being blown up. Schmidt even compares AI to a nuclear arms race and evokes Cold War imagery.

This is classic engineered consent. First, define the problem as so existential and terrifying that any dissent feels like betrayal. Then, position yourself or your company as the “good guys”, the only viable and ethical solution to the problem. Anything else will lead to (insert very bad things here).

Once the “problem” is defined in his TED Talk, which is “losing”, Schmidt rolls out the magic solution (and Bernays would be proud). Trust BIG TECH to solve THE PROBLEM with closed-source PROPRIETARY technologies.

In this talk, he paints open source AI as “dangerous”, a reckless gamble because the “enemy” could use it against us. Proprietary models and massive, centralized data centers become the only responsible choice, the last line of defense against chaos. It’s Bernays’ bacon campaign all over again. Instead of doctors selling you bacon, it’s billionaires selling you proprietary AI systems subsidized by governments. It’s not an opinion though, it’s expert consensus. “Trust us, we know things, scary things”.

When the rhetoric fades and the conversation shifts to hard costs (think energy, carbon, and water usage during training and inference), Schmidt leads with the admission that “there’s a real limit on energy”, adding “we need another 90 gigawatts of power in America.” His strawman proposal? “Think Canada,” because, as he puts it, “nice people, full of hydroelectric power.” In reality, even if this were possible in America’s current political climate, it simply shifts the environmental burden elsewhere.

Meanwhile, the conversation quickly pivots to the “real” problem.

“What’s the limit of knowledge? How do we invent something completely new?”

It’s the playbook. Acknowledge the challenges first to quickly minimize them, build popular support for governments and taxpayers to close the gap (“Please sir, may I have more subsidized data centers?”), then big, beautiful American tech CEOs will own even more of the market while others deal with the pesky business of power. (Forget the fact that even if all of this came true, those same data centers would be used to put more people out of work to boost corporate profits.)

The formula is obvious:

- Define the crisis

- “If we don’t win the AI race, the bad guys will unleash disaster.”

- “Open source models are dangerous.”

- Downplay the challenges

- “Think Canada. Nice people, full of hydroelectric power.”

- “Algorithms will become more efficient eventually.”

- Offer the magic solution

- “Trust our proprietary models and hyperscale infrastructure to save the day.”

- Engineer consent

- Flood the media with this story, making counterpoints seem naïve, unpatriotic, or uninformed.

Like how bacon was sold as “doctor-approved”, today’s AI tools are sold as “humanity-approved”. The environmental costs like soaring energy use, carbon footprints, and water consumption get buried under feel-good narratives of innovation and safety. The pitch is polished, the incentives clear, and the stakes engineered to seem too high to question.

The Promise of AI and Finding an Ethical Compass

About a year ago (at the time of this writing), an AI model identified an unconventional drug combination for a patient with a rare, life-threatening blood disorder, when no other treatment options remained. Within days, the treatment was working, and led to a recovery that would have been impossible without this discovery14.

This is a real, ethical application of AI to solve a real, human problem. Accelerating the search for life-saving treatments shows that AI can be a force for good. So, how do we build on this kind of promise, while resisting the pull of hype and manipulation?

As I navigate the shifting landscape of AI myself, I’ve found it helpful to establish my own personal ethical compass, an intentional set of first-principles to guide my work and decisions. This isn’t a manifesto to impose on others. It’s a personal framework, shaped by my own experiences. In fact, this article itself emerged from a decision informed by this compass. I decided it was worth the time to research and write, even if only a handful of people read it.

These aren’t rules to impose on others, nor a manifesto to sign. They’re reflections of what I believe will help ground us in integrity and balance amid the noise. Borrow from them if they resonate.

Champion Open Solutions

Embrace open data, open models, and open-source solutions to AI.

- Transparency fuels accountability.

- Transparency doesn’t preclude commercial success.

- Transparency means building commercial value atop foundations everyone can inspect, challenge, and improve.

Stay Vigilant Against Hype

Scrutinize every claim, whether from startups, corporations, or evangelists.

- Follow the money and incentives.

- Demand transparency in compute, energy, and environmental impact.

- Avoid black-box systems and opaque practices.

- Look for sustained contributions and community adoption, not hype.

Prioritize Humans

AI should augment, not replace, human decision-making.

- Keep human agency at the center of any system.

- Support human practitioners rather than displacing them.

- Prioritize human dignity and care in design.

Invest in Hard Skills

Equip yourself and your teams with deep technical and domain knowledge.

- Prioritize reasoning, design, and explainability.

- Resist manipulation and vendor lock-in.

- Build resilience through knowledge and skill.

Promote Education

Reject engineered narratives and support transparent education.

- Prioritize education over indoctrination.

- Demand humility and honesty in narratives.

- Treat transparency as a responsibility, not a feature.

Promote Sustainability

Innovate boldly, but weigh environmental, economic, and societal impacts.

- Recognize the illusion of false “AI efficiency”.

- Embrace long-term sustainable practices.

- Make choices that support both progress and the planet.

Preserve Integrity

Stay anchored in a long-term vision of serving society.

- Resist easy shortcuts driven by hype.

- Build systems that prioritize societal needs over profit.

- Remember that doing good can also lead to doing well.

Demand Proof

Insist on proof of real-world effectiveness, efficiency, and fairness.

- Compare compute costs to deterministic baselines (

if/elselogic). - Evaluate reliability, error profiles, and mitigation costs.

- Trace financial incentives behind narratives (follow the money).

By keeping this compass in mind, I hope we can balance AI’s genuine promise to improve lives with the realities of how narratives, incentives, and human nature shape its development.

The answer isn’t to reject AI outright, nor to blindly accept the hype. It’s to cultivate intentional, informed skepticism. History teaches us that propaganda thrives when people either panic or disengage. The electric current wars remind us that we can push back against fear-mongering, highlight hidden costs, and still champion innovation that genuinely serves society.

We can approach AI with the same mindset. Recognize its value as a tool. Demand transparency in its models and accountability for its impacts. Resist surrendering decisions to algorithms we can’t audit. Keep human judgement as the final layer of oversight.

These steps won’t solve every problem, but they’ll help you separate real innovation from the noise.

Conclusion

I don’t claim to have all the answers. My goal with this series is to spark reflection and sharpen our collective understanding. The deeper I dug into this topic, the more I realized how little of the broader picture is visible from day-to-day tech conversations.

For the open-source community and technologists at large, the path forward is to stay grounded, to innovate ethically, and to build systems that serve society, not only profit margins of already rich tech dynasties.

Remember that the sky has been falling since the beginning of human storytelling. Every age has faced its own version of doom and disruption. And yet, we always manage a path forward. Stay curious, ask hard questions, and resist being swept up by the loudest voices.

So let’s keep building. Let’s keep asking. Let’s stay vigilant and hopeful. History doesn’t always repeat itself, but it always invites us to learn from it.

-

See Quartz, “Tech Layoffs Triggered By Tax Code Changes” for an overview of how Section 174 quietly reshaped tech company spending. ↩︎ ↩︎

-

Manish Raghavan, Solon Barocas, Jon Kleinberg, and Karen Levy, Mitigating Bias in Algorithmic Hiring: Evaluating Claims and Practices, 2019. ↩︎

-

Lex Fridman and ThePrimeagen, Will AI Replace Programmers? YouTube ↩︎

-

Karen Hao, Will Sam Altman and His AI Kill Us All? YouTube ↩︎

-

Strubell, E., Ganesh, A., & McCallum, A. (2019). Energy and Policy Considerations for Deep Learning in NLP. ACL ↩︎

-

Patterson, D., et al. (2021). Carbon Emissions and Large Neural Network Training. arXiv:2104.10350 ↩︎

-

Luccioni, A. S., Viguier, S., & Ligozat, A.-L. (2022). Estimating the Carbon Footprint of BLOOM, a 176B Parameter Language Model. arXiv:2211.02001 ↩︎

-

AI Finds New Uses for Old Drugs to Save Lives (New York Times, March 20, 2025) Read article ↩︎